As I mentioned in my last article, I'm interested in the implementation details and the security of both open- and closed-source GPU drivers.

Apart from the security implications of the model used by some current drivers (they allow the OpenGL client to send commands directly to the GPU, with the kernel only checking the command stream for illegal address references instead of relying on an actual IOMMU), there is a much simpler way to cause mischief when given access to an accelerated OpenGL implementation on a system: uninitialized buffers.

Normally, when a process requests memory from the operating system (for example through the malloc standard library function, which for larger requests typically uses an anonymous, private mmap mapping), the kernel goes to the effort of zeroing out the newly allocated pages of main memory. The C language specification does not require this in any way, and one should never rely on that implementation detail (smaller allocations may be served by the library from memory the process freed earlier, and those are not guaranteed to be zero-initialized), but it's a pretty important security feature.
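To make the zeroing behaviour concrete, here is a minimal sketch for Linux that requests a fresh anonymous, private mapping directly and counts the non-zero bytes in it. The helper name `count_nonzero_in_fresh_mapping` is made up for this illustration; `mmap`, `munmap` and the flags are the standard POSIX/Linux ones.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

/* Hypothetical helper: maps `len` bytes of fresh anonymous memory and
 * returns how many of them are non-zero, or -1 if the mapping failed. */
long count_nonzero_in_fresh_mapping(size_t len)
{
    assert(len > 0);

    /* Anonymous + private: not backed by a file, not shared with anyone. */
    unsigned char *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    long nonzero = 0;
    for (size_t i = 0; i < len; i++)
        if (p[i] != 0)
            nonzero++;

    munmap(p, len);
    return nonzero;
}
```

On Linux, a call such as `count_nonzero_in_fresh_mapping(1 << 20)` should report no non-zero bytes, whatever the physical pages contained before. The same is not guaranteed for a small `malloc` that is satisfied from memory the process itself freed earlier.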

Just imagine what would happen if the physical memory block had previously been allocated to your browser and contained the session cookie for an online banking session, or worse, to an instance of GPG holding your private key... And while most security-relevant code will probably go to great lengths to prevent that kind of thing by overwriting the relevant memory locations before deallocating them, there is always the possibility of an application crash, which renders those protections useless.
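The "overwrite before deallocating" step could look roughly like the following sketch. Both function names are hypothetical, and real projects often use a platform facility instead (for example `explicit_bzero` where available). The volatile pointer is one common way to keep the compiler from optimizing the stores away.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical helper: overwrite `len` bytes at `buf` with zeros.
 * Writing through a volatile pointer keeps the compiler from dropping
 * the stores as "dead" just because the buffer is released right after. */
void wipe_secret(void *buf, size_t len)
{
    assert(buf != NULL || len == 0);
    volatile unsigned char *p = buf;
    while (len--)
        *p++ = 0;
}

/* Hypothetical helper: wipe a heap buffer, then hand it back to the allocator. */
void free_secret(void *buf, size_t len)
{
    if (buf == NULL)
        return;
    wipe_secret(buf, len);
    free(buf);
}
```

None of this runs if the process is killed or crashes before reaching `free_secret`, which is exactly why the kernel-side zeroing matters.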

All in all, that operating system feature is essential to guarantee isolation among different users who are working on the same machine simultaneously. (As a side note, some file systems do something similar: they don't zero out newly allocated blocks, but use a different method to achieve a similar effect and to prevent users from gaining access to residual chunks of data if the system crashes during the allocation.)

I was expecting the same thing to happen in GPU drivers, since nowadays many window managers use OpenGL acceleration to draw window contents at the right locations with various effects like transparency or animated window switching. Basically, each window's content is stored as an OpenGL texture, which is later mapped to a rectangle on the graphical desktop. So, in many cases, the content of those textures is at least as security-critical as the content of main memory – just think about your terminal's or browser's window content. Well, it turns out I was wrong:

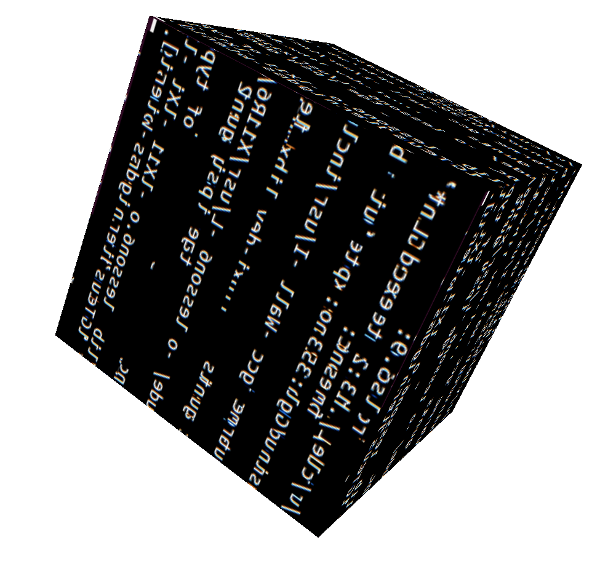

This screenshot shows a simple OpenGL demonstration program, which I modified just a tiny bit: I removed the part that loads the cube texture from memory, or more accurately, replaced the pointer to the image data with a null pointer (which seems to be allowed by the OpenGL specification). It is left to the implementation whether the buffer is zero-initialized in that case or remains uninitialized – and the nouveau driver for my Nvidia card seems to do the latter, apparently for performance reasons.
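For comparison, an application that wants a defined starting state can upload zeros itself instead of passing a null pointer. The sketch below assumes an RGBA texture with 8 bits per channel. The helper `alloc_zeroed_rgba` is a made-up name, and the `glTexImage2D` call is shown only in a comment because it needs a current GL context.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: returns a zero-filled RGBA8 pixel buffer of
 * width * height texels, or NULL on overflow or allocation failure. */
unsigned char *alloc_zeroed_rgba(size_t width, size_t height)
{
    if (width == 0 || height == 0)
        return NULL;
    /* Make sure width * height * 4 fits into a size_t. */
    if (width > SIZE_MAX / 4 / height)
        return NULL;
    return calloc(width * height, 4);
}

/* With a current GL context, the buffer would replace the null pointer:
 *
 *   unsigned char *pixels = alloc_zeroed_rgba(w, h);
 *   glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, w, h, 0,
 *                GL_RGBA, GL_UNSIGNED_BYTE, pixels);   // instead of NULL
 *   free(pixels);
 */
```

This only helps a cooperative application get predictable results. It does nothing to stop a malicious program from passing the null pointer on purpose, so the actual fix has to live in the driver.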

I asked the nouveau developers in the IRC channel for their view on the topic, and Dave Airlie told me that while video buffers in main memory should be zero-initialized on nouveau, buffers residing in video memory are not overwritten by default, although doing so would theoretically be possible.

On integrated GPUs that use the main memory for all of their buffers, the problem could be even more severe – not only the content of other users' windows, but even arbitrary memory contents could theoretically be extracted with custom shader code. I retried the experiment on an Intel GPU, and was relieved to only see an untextured black cube. The same thing happens on Android, where I tried it on both an Adreno-equipped and an Nvidia Tegra-equipped device. However, this does not mean that those platforms are safe – it only means that somewhere in their OpenGL implementation, the buffer is zeroed. That might well happen only in the userspace library, and could therefore be circumvented by interfacing with the command buffer directly (which is admittedly much more difficult, and might well be impossible for things like WebGL, where application code has no direct access to those buffers).

One possible mitigation for that security risk is very simple, and therefore widely used: just don't give access to the video hardware to anyone but users who are physically present. Many Linux distributions do just that with the allowed_users=console setting of the Xwrapper.config configuration file. This reduces the attack surface significantly – most computers are only used for desktop logins by a single person at a time, and anybody who is able to run software in that user's X session (which seems to be an additional requirement for GPU hardware access, at least on DRI/DRM) has much easier ways to grab arbitrary window contents.

But with WebGL becoming more and more popular, that situation is changing – now, web page authors can execute OpenGL code on any visitor's GPU hardware, and read back the content of the resulting images (within the limits imposed by the same-origin policy). This might be one of the reasons why WebGL specifically mandates that implementations clear newly allocated buffers before handing them to the application. That's obviously a very good idea, seeing that there is even a working exploit for that particular loophole! Now let's hope that all browser vendors read that part of the specification carefully, and we should be safe – but only against that specific security threat of running untrusted code on hardware with direct access to the main memory...
